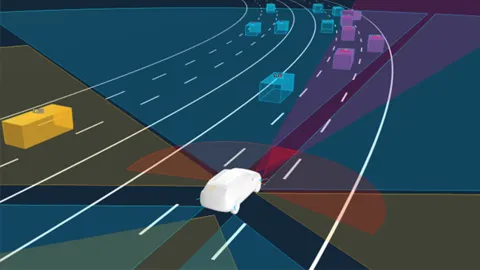

To make sense of complex environments, automated systems need more than raw sensor data: they need context. High-level sensor fusion combines already-interpreted inputs, such as detected objects, from multiple sensors into one cohesive view. Because it works on these compact object-level results rather than on raw data, the fusion step is faster, more efficient, and easier to scale. It also strengthens system reliability through redundancy, simplifies the integration of different sensor types, and supports modular development across diverse applications.
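As a rough illustration of what combining already-interpreted inputs can look like, the sketch below fuses two object lists in Python. It is a minimal example under stated assumptions, not the method presented in the webinar or used in any AVL product: the `Detection` type, the sensor labels, the 2 m association gate, and the choice of greedy nearest-neighbour matching with inverse-variance averaging are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    sensor: str      # hypothetical sensor label, e.g. "camera" or "radar"
    x: float         # position in a shared vehicle frame, metres (assumed)
    y: float
    variance: float  # assumed isotropic position uncertainty, m^2


def fuse_pair(a: Detection, b: Detection) -> Detection:
    """Inverse-variance weighted average of two detections of one object."""
    wa, wb = 1.0 / a.variance, 1.0 / b.variance
    total = wa + wb
    return Detection(
        sensor=f"{a.sensor}+{b.sensor}",
        x=(wa * a.x + wb * b.x) / total,
        y=(wa * a.y + wb * b.y) / total,
        variance=1.0 / total,
    )


def fuse_object_lists(primary, secondary, gate=2.0):
    """Greedy nearest-neighbour association, then per-pair fusion.

    Detections farther apart than `gate` metres are treated as
    different objects and passed through unchanged.
    """
    fused = []
    unmatched = list(secondary)
    for det in primary:
        best = min(
            unmatched,
            key=lambda o: hypot(o.x - det.x, o.y - det.y),
            default=None,
        )
        if best is not None and hypot(best.x - det.x, best.y - det.y) <= gate:
            unmatched.remove(best)
            fused.append(fuse_pair(det, best))
        else:
            fused.append(det)
    # Objects seen only by the secondary sensor are kept (redundancy).
    fused.extend(unmatched)
    return fused


if __name__ == "__main__":
    camera = [Detection("camera", 10.0, 0.0, 1.0), Detection("camera", 30.0, 5.0, 1.0)]
    radar = [Detection("radar", 11.0, 0.0, 1.0), Detection("radar", 50.0, -3.0, 0.5)]
    for obj in fuse_object_lists(camera, radar):
        print(obj)
```

Each sensor's detector runs on its own and only the resulting object lists meet in `fuse_object_lists`, which is what lets a sensor be added or swapped without touching the others. A production system would typically add tracking over time and a more robust association step.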

Smarter Development, Faster Results

By processing each sensor independently, developers can reuse proven algorithms, simplify debugging, and accelerate prototyping. There’s no need to synchronize raw data streams, which lowers complexity and cost. With tools like AVL Scenario Simulator™, fusion strategies can even be tested virtually, eliminating the need for expensive physical validation.

Typical Applications of High-Level Fusion

From ADAS and autonomous driving to logistics and robotics, high-level fusion is key to real-time, reliable perception in dynamic environments. It supports fast decision-making where adaptability is critical, especially in urban settings or complex operational spaces.

This webinar marks the beginning of a three-part series. Stay tuned for the upcoming sessions as we continue to explore how to overcome the complexity and safety challenges of developing ADAS/AD systems.

Simon Terres, Solution Manager ADAS, AVL Advanced Simulation Technologies

Marko Mesaric, Analysis Engineer Software Services, AVL Advanced Simulation Technologies